Experiment #1 | 3 LLMs try a GMAT Quant Question

Comparing the outputs from Claude, ChatGPT, and DeepSeek (free versions!) when asked to solve a logic-based GMAT Quant question.


Harsha

2/19/2025 | 2 min read

I happened to come across an interesting GMAT Quant question today, in the topic of Overlapping Sets (where we typically make those 3-circle “Venn diagrams”).

I realized that the problem needed practically no math and could be solved by pure logic. And then I had a thought: how would Claude, ChatGPT, and DeepSeek fare against this question? Would they be able to solve it correctly? Would their solutions rely on math or on logic? Are the LLMs already better than us :)? Etc.

Here is a link to the question, in case anyone is interested in solving it first: Here

Here is how I solved this → Here

Skip this portion and move on if you want to solve the question yourself first.

*******************************

1) Given that there are 40 applicants, it is possible that there is ZERO overlap of applicants between A and B. A possible scenario: 15 applicants admitted by A and a completely different 17 applicants admitted by B (15 + 17 = 32, which fits within the 40).

2) In this scenario, irrespective of which of the 40 applicants are admitted by C, because there is not a single applicant admitted by both A and B, the number of applicants admitted by all 3 (A, B, and C) is 0.

3) Hence, the answer to the question asked (at the very least, how many applicants are admitted by all 3?) should be 0.
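For anyone who wants to sanity-check the logic above, here is a minimal Python sketch. It relies on the standard bound for three sets drawn from a pool of `total` items: the triple overlap can be as small as `max(0, a + b + c - 2 * total)`. The function and variable names are my own, and since the number of applicants admitted by C is not stated in this post, the sketch simply tries every possible size for C.

```python
def min_triple_overlap(a: int, b: int, c: int, total: int) -> int:
    """Smallest possible size of A ∩ B ∩ C, given the set sizes and pool size."""
    # Each applicant missing from A, B, or C rules out at most one member of
    # the triple overlap, so at least a + b + c - 2 * total must be in all
    # three sets. The count can never drop below 0.
    return max(0, a + b + c - 2 * total)


TOTAL, A_SIZE, B_SIZE = 40, 15, 17

# The explicit scenario from step 1: A and B share no applicants.
admitted_a = set(range(0, A_SIZE))                # applicants 0..14
admitted_b = set(range(A_SIZE, A_SIZE + B_SIZE))  # applicants 15..31
assert not (admitted_a & admitted_b)

# Whatever the size of C (0 to 40), the minimum triple overlap stays 0.
results = {min_triple_overlap(A_SIZE, B_SIZE, c, TOTAL) for c in range(TOTAL + 1)}
print(results)  # {0}
```

The formula only confirms what the logic already shows: with 15 + 17 = 32 applicants needed and 40 available, A and B never have to share anyone, so C cannot force a triple overlap.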

*******************************

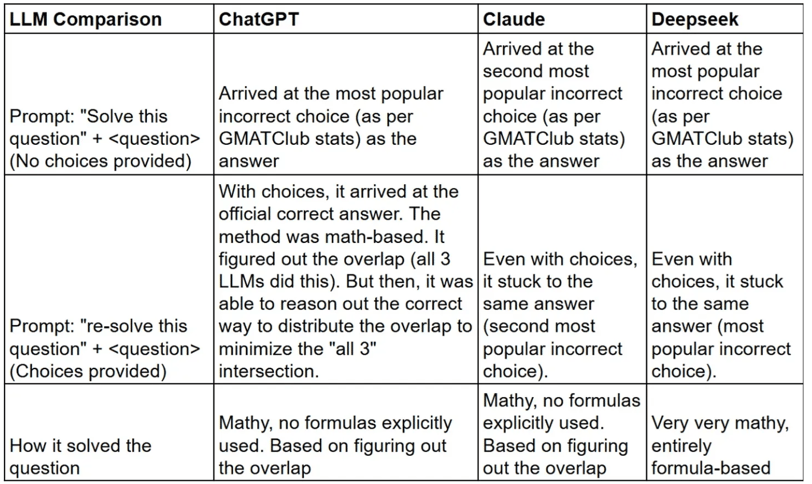

Now, here is how the free versions of ChatGPT, Claude, and DeepSeek compared when given the same question (first, they were asked to solve without choices provided; then, they were asked to re-try the question with choices provided).

To summarize →

When asked to solve the question without answer choices, initially, all 3 gave incorrect answers.

When asked to re-solve the question with the answer choices provided, ChatGPT corrected itself and arrived at the correct answer. The method was math-based, but did not explicitly go into formulas.

Claude and DeepSeek stuck to their original (incorrect) answers even with the choices provided.

While Claude and ChatGPT used comparable math-based approaches but refrained from showing very formulaic work, DeepSeek followed a completely formula-based approach. None of the LLMs applied the simple logic we have used above to solve the question.

Overall, what does this mean? I do not know. I am very sure that LLMs are getting better every day. I also use them extensively for my work. But at least as of now (and perhaps, only for now?), the LLMs still have some distance to cover in terms of certain types of logical reasoning.

——

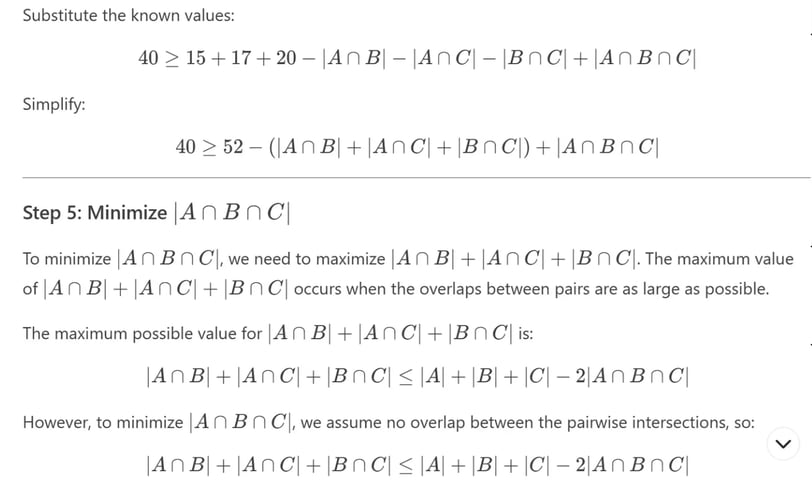

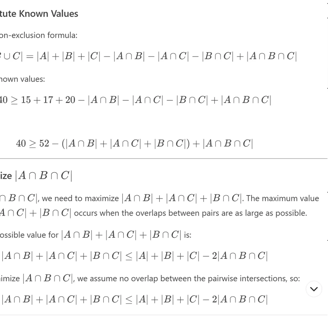

P.S. Here is a glimpse of how DeepSeek approached this problem. Very interesting!

Anyway, that is it for today.

Harsha
